The browser is eating itself

What is a browser?

- It’s a screen where we can display stuff.

- It does I/O (e.g. keyboard, mouse, network).

- It’s a terminal with a Turing complete language (JavaScript).

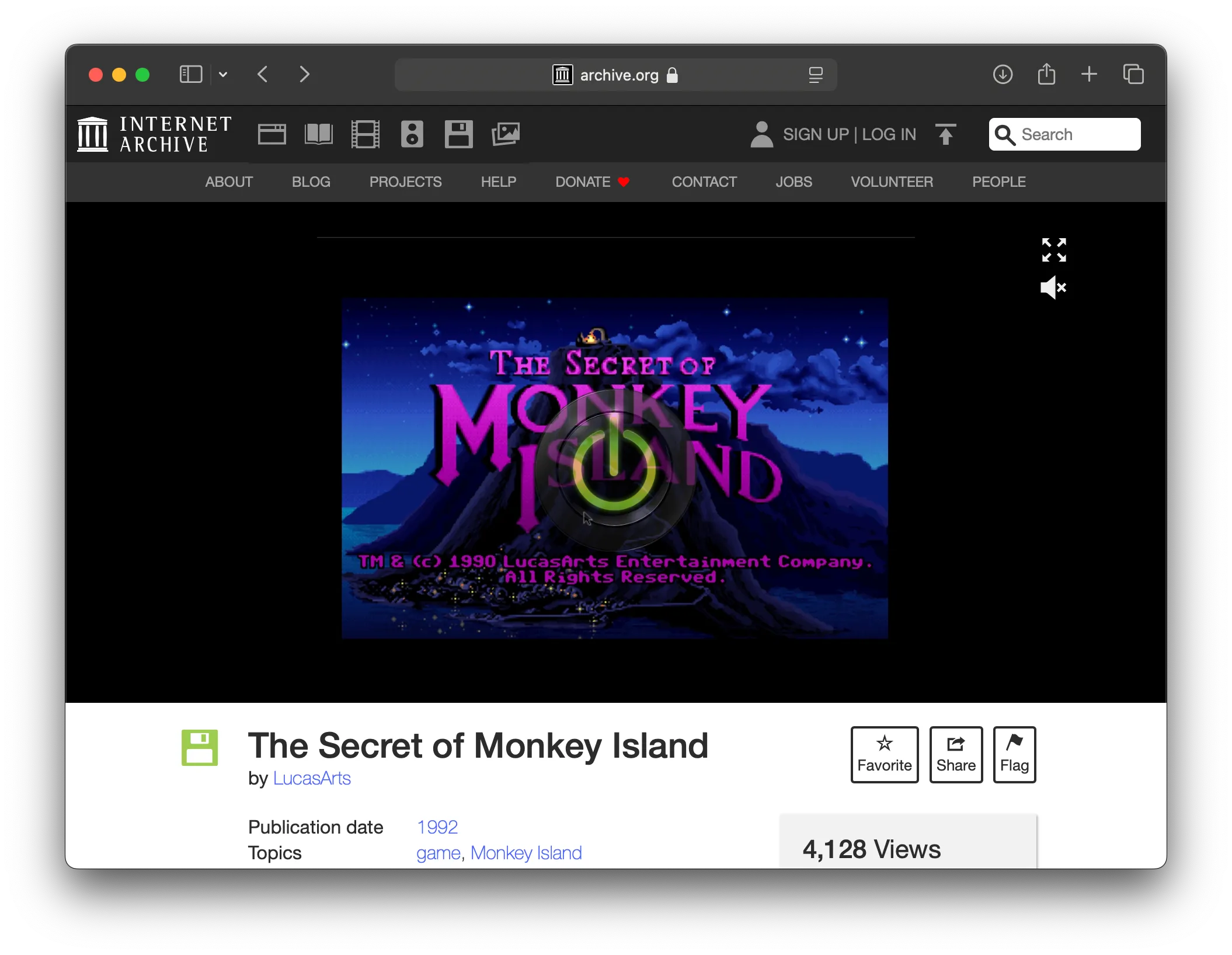

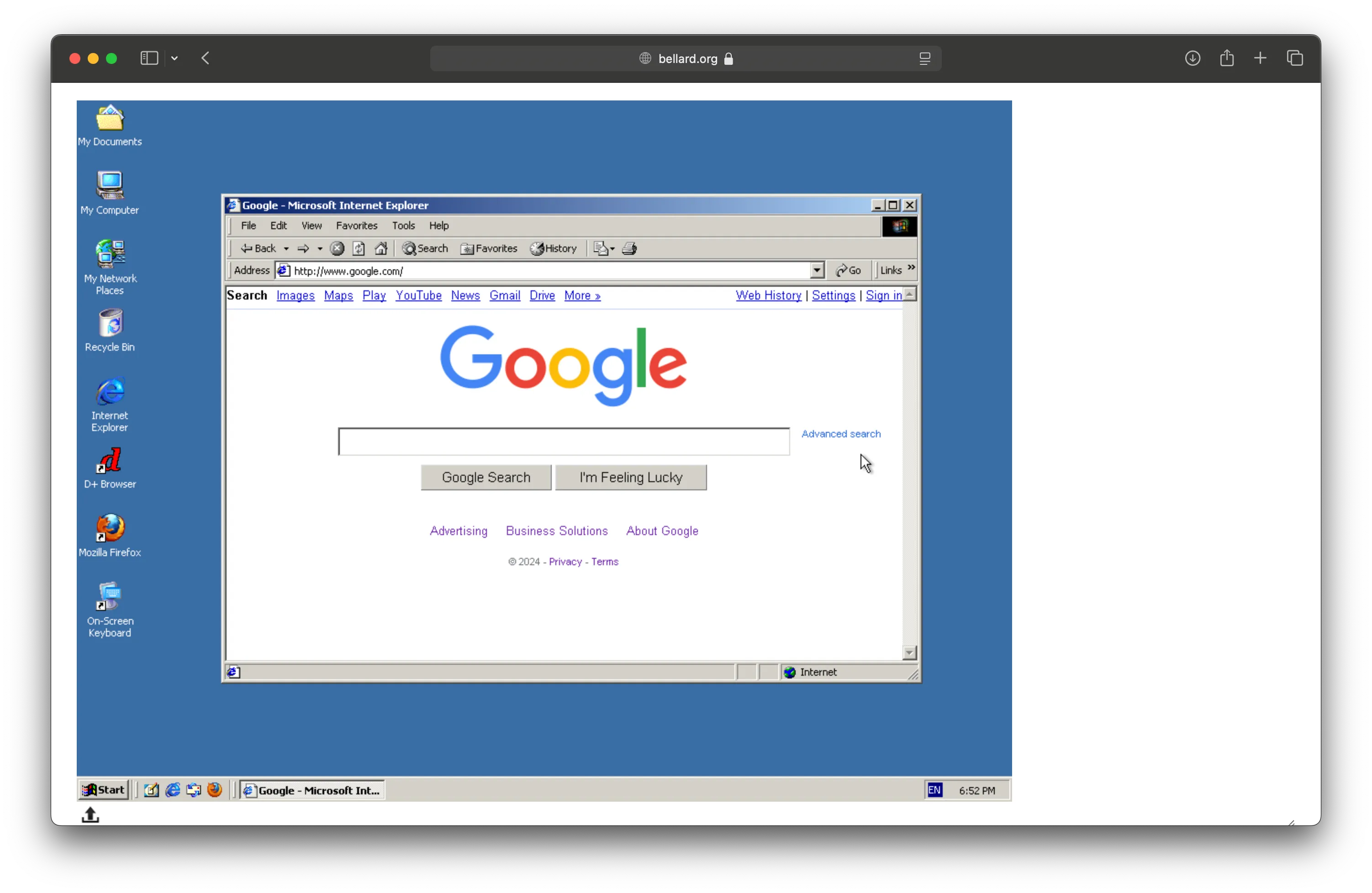

- It’s an emulator for vintage games and operating systems[1][2], which means you can even run a browser inside a browser.

The browser started as a viewer for interconnected documents. But the reality is that it is now a Turing-complete machine. The browser is no longer a browser but a virtual machine.

How did we arrive here?

- The first browser was WorldWideWeb in 1990 (Tim Berners-Lee).

- The W3C (World Wide Web Consortium) was founded in 1994.

- JavaScript is created in 1995 and ships with another browser, Netscape Navigator 2.

- In 1997, DHTML (Dynamic HTML) makes it possible to change an HTML document after it has loaded.

- In 1998, the DOM becomes a standard.

- From 2000 to 2006, XMLHttpRequest is invented and standardised; it powers the AJAX revolution.

- In 2008, HTML5 changes everything with the canvas element.

- In 2009, the Web Workers API is introduced and brings us concurrency.

- In 2015, ES6 (ECMAScript 2015) makes JavaScript pleasant enough to work with.

- In 2017, WebAssembly (WASM) ships in all major browsers: a new, silent revolution.

- In 2020, we get the File System Access API.

Thirty years! Thirty years, and we still don’t know how to make a website. Should I pick Angular, React, Svelte or vanilla JavaScript? To VDOM or not to VDOM? So much choice. And it’s about to get worse. Let me tell you why.

What is the difference between these two HTML files (each loaded in an iframe)?

One is 100% HTML; the other is an interface drawn on a WebGL canvas, which partly ditches HTML. If you don’t look under the hood, there is no difference. Okay, one giveaway might be text selection.

Why would you want to get away from HTML? Because it lets us try new ways of doing things. For example, we could reimagine how we engineer and code graphical user interfaces.

Game development has two schools of thought about building user interfaces: immediate mode[3][4][5] and retained mode. The DOM has a retained-mode API, and it is the most popular way of working. You create an element, bind it to an object, and insert it into the tree. Elements keep some internal state and notify you of changes via events and callbacks. When you receive an event, you update your application state.

Consider the following pseudocode as an example:

```javascript
// Text, InputText and Button are hypothetical retained-mode widget classes
// (not real DOM APIs).
const state = {
  buffer: "Type here",
  name: "",
};

// Handlers are defined before use: `const` bindings cannot be read earlier.
const updateBuffer = (event) => {
  state.buffer = event.value;
};

const updateName = () => {
  state.name = state.buffer;
  // Push the new value into the widget's own copy of the state.
  textElement.value = `Hello ${state.name}`;
};

const textElement = new Text(`Hello ${state.name}`);

const inputElement = new InputText(state.buffer);
inputElement.addEventListener("change", updateBuffer);

const saveButtonElement = new Button("Save");
saveButtonElement.addEventListener("click", updateName);
```

We end up with problems in large applications because we have to deal with several copies of state: the main state of the application and the internal state of each DOM widget, which must be kept in sync. React’s job is to do this cache invalidation for you, at a performance cost: it re-renders components into a virtual DOM, compares the result with the previous one, and applies only the differences to the real DOM. Wouldn’t it be easier to redraw everything rather than cleverly working out what has changed and deducing the minimal set of updates? This is what immediate mode is about.
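To make the “finding out what has changed” step concrete, here is a deliberately simplified sketch. It assumes a hypothetical render output (a flat array of `{ key, text }` nodes) and is not React’s actual reconciliation algorithm: it compares two renders and returns only the patch operations needed to go from one to the other.

```javascript
// Hypothetical render output: an array of { key, text } nodes.
// diff() returns the operations needed to turn `prev` into `next`.
function diff(prev, next) {
  const ops = [];
  const prevByKey = new Map(prev.map((node) => [node.key, node]));
  const nextKeys = new Set(next.map((node) => node.key));

  // Nodes that disappeared must be removed from the tree.
  for (const node of prev) {
    if (!nextKeys.has(node.key)) ops.push({ op: "remove", key: node.key });
  }

  // New nodes are inserted; existing nodes are updated only if their text changed.
  for (const node of next) {
    const old = prevByKey.get(node.key);
    if (old === undefined) {
      ops.push({ op: "insert", key: node.key, text: node.text });
    } else if (old.text !== node.text) {
      ops.push({ op: "update", key: node.key, text: node.text });
    }
  }
  return ops;
}

const before = [
  { key: "greeting", text: "Hello !" },
  { key: "save", text: "Save" },
];
const after = [
  { key: "greeting", text: "Hello Ada!" },
  { key: "save", text: "Save" },
];

// Logs a single "update" operation for the "greeting" node.
console.log(diff(before, after));
```

Even this toy version has to track identity (the `key`), and it ignores reordering, which real reconcilers must also handle. Immediate mode sidesteps the question by redrawing everything.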

Now consider the following pseudocode for an immediate mode version:

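```javascript
// Text, InputText and Button are hypothetical immediate-mode widgets:
// they are drawn on every call and keep no state between frames.
const state = {
  buffer: "Type here",
  name: "",
};

// Called once per frame; requestAnimationFrame drives the loop
// (a literal while (true) would block the browser's main thread).
function frame() {
  Text(`Hello ${state.name}!`);

  // The widget returns the (possibly edited) text; the application owns it.
  state.buffer = InputText("Name", state.buffer);

  // Button returns true on the frame it is clicked.
  if (Button("Save")) {
    state.name = state.buffer;
  }

  requestAnimationFrame(frame);
}

requestAnimationFrame(frame);
```

Compared with the retained-mode version, the IMGUI pseudocode has the following properties: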

- A single code path: in immediate mode, widgets are recreated every frame.

- No widget allocation, since widgets don’t persist from one frame to the next.

- A unified control flow (no callbacks or event dispatch).

- No syncing between our state and the widgets’ internal state.

- UI as code, not UI as data.
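The properties above can be seen in a minimal immediate-mode core. The sketch below is hypothetical (none of these names come from a real library): widgets are plain function calls that append draw commands to a list rebuilt every frame, and the only state kept between frames is the mouse input plus which widget is “hot” (hovered) or “active” (pressed), the bookkeeping commonly used in IMGUI libraries.

```javascript
// A hypothetical, minimal immediate-mode UI core (not a real library).
// Only the mouse input and two widget ids ("hot" = hovered,
// "active" = being pressed) survive between frames; the draw list
// is thrown away and rebuilt on every frame.
function createUI() {
  return {
    mouse: { x: 0, y: 0, down: false },
    mouseWentDown: false,
    hot: null,
    active: null,
    drawList: [],
  };
}

function beginFrame(ui, mouse) {
  // A press only counts on the frame the mouse button goes down.
  ui.mouseWentDown = mouse.down && !ui.mouse.down;
  ui.mouse = mouse;
  ui.hot = null;
  ui.drawList = [];
}

function inside(mouse, r) {
  return mouse.x >= r.x && mouse.x < r.x + r.w &&
         mouse.y >= r.y && mouse.y < r.y + r.h;
}

// Draws a button and returns true on the frame it is clicked
// (pressed and then released while the pointer is over it).
function button(ui, id, label, rect) {
  let clicked = false;
  if (inside(ui.mouse, rect)) ui.hot = id;

  if (ui.active === id) {
    if (!ui.mouse.down) {
      clicked = ui.hot === id;
      ui.active = null;
    }
  } else if (ui.hot === id && ui.mouseWentDown && ui.active === null) {
    ui.active = id;
  }

  ui.drawList.push({ kind: "rect", ...rect, pressed: ui.active === id });
  ui.drawList.push({ kind: "text", text: label, x: rect.x + 8, y: rect.y + rect.h / 2 });
  return clicked;
}

// Application code: the whole UI is re-run from state on every frame.
const state = { buffer: "Ada", name: "" };

function frame(ui, mouse) {
  beginFrame(ui, mouse);
  ui.drawList.push({ kind: "text", text: `Hello ${state.name}!`, x: 10, y: 10 });
  if (button(ui, "save", "Save", { x: 10, y: 40, w: 80, h: 24 })) {
    state.name = state.buffer;
  }
  return ui.drawList;
}

// Simulate two frames: press over the button, then release over it.
const ui = createUI();
frame(ui, { x: 20, y: 50, down: true });
frame(ui, { x: 20, y: 50, down: false });
console.log(state.name); // logs: Ada
```

Note how `button` both draws and reports the click in one call: there is no callback to register and no widget object to keep in sync. A real library would also handle keyboard focus, text input and layout, which this sketch leaves out.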

One quick objection to this method is the waste of resources: we redraw the screen all the time. But computers and graphics cards are fast these days, and video games do exactly the same thing. The simplicity we gain is worth the trade-off.

This is not the first time we have tried to do things differently. Here is how we used to embed Flash applications, bypassing HTML and the DOM:

```html
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
<html xmlns="http://www.w3.org/1999/xhtml">
  <head>
    <title>A typical Flash website</title>
  </head>
  <body>
    <object
      data="flash-program.swf"
      type="application/x-shockwave-flash"
      width="1025" height="800">
      <param name="quality" value="high" />
      <param value="flash-program.swf" name="movie" />
    </object>
  </body>
</html>
```

And then how we dealt with SPAs, where the document is dynamically computed:

```html
<!doctype html>
<html lang="en">
  <head>
    <title>A typical React SPA</title>
  </head>
  <body>
    <noscript>
      You need to enable JavaScript to run this app.
    </noscript>
    <!-- React will render here -->
    <div id="root"></div>
  </body>
</html>
```

Running applications directly on a canvas element, as Flutter[5], Clay[6] and egui[7] do, might be the next frontier. Today’s GUI paradigms were developed at Xerox PARC in the 1970s, alongside the invention of object-oriented languages. The DOM was invented during a period when object-oriented programming (and XML) was popular. The point is that there are other options to explore beyond how the DOM operates today. React does a great job here, giving developers an immediate-mode-style API on top of the DOM through abstractions. However, the DOM’s age and roots could hold back innovation.

“Every browser is essentially a different platform. There are differences in how basic HTML elements are implemented, there are literally thousands small quirks in behavior coming from decades of legacy code that needs to be supported. If you ever worked on a long-lived web application, you for sure have encountered a lot of code written just to achieve the same behavior on IE, Safari and the rest (notable example: clicking a button in Safari doesn’t give it focus). What if… you could ditch the platform and become the platform?” (Red Otter documentation)
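What does “becoming the platform” look like at the lowest level? A minimal sketch, assuming a hypothetical list of draw commands (not the API of Flutter, Clay or egui): the application clears a standard Canvas 2D context and repaints every rectangle and label itself. The browser only sees a bitmap.

```javascript
// Replays hypothetical draw commands onto a Canvas 2D context.
// Works with any object exposing the CanvasRenderingContext2D members used here.
function render(ctx, commands, width, height) {
  ctx.clearRect(0, 0, width, height); // redraw everything, every frame
  for (const cmd of commands) {
    switch (cmd.kind) {
      case "rect":
        ctx.fillStyle = cmd.pressed ? "#888" : "#ccc";
        ctx.fillRect(cmd.x, cmd.y, cmd.w, cmd.h);
        break;
      case "text":
        ctx.fillStyle = "#000";
        ctx.textBaseline = "middle";
        ctx.fillText(cmd.text, cmd.x, cmd.y);
        break;
      default:
        throw new Error(`Unknown draw command: ${cmd.kind}`);
    }
  }
}

// In a browser (assumes a <canvas id="app"> element on the page):
// const canvas = document.getElementById("app");
// render(canvas.getContext("2d"), [
//   { kind: "rect", x: 10, y: 40, w: 80, h: 24, pressed: false },
//   { kind: "text", text: "Save", x: 18, y: 52 },
// ], canvas.width, canvas.height);
```

Because `render` only calls methods on the `ctx` it receives, it can be tested with a stub object outside the browser. Note what is lost along the way: text painted with `fillText` cannot be selected, which is exactly the giveaway mentioned earlier.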

What about accessibility? The browser builds an accessibility tree from the DOM and exposes it to assistive technologies such as screen readers. Although Flutter renders to a canvas, it cleverly maintains an invisible tree of DOM elements for accessibility. I’m sure AI will bring us new accessibility tools in the future, too. I imagine an interface where AI can read and understand what’s on the screen and let users interact by voice or by typing instructions and questions. I’m confident that creating new runtimes can lead to innovations in this area[8]. Some applications already take a hybrid approach, like Google Docs[9] and Figma[10], where UI widgets are DOM elements but the main content is rendered on a canvas.
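The hybrid idea behind Flutter’s accessibility support can be sketched in a few lines. This is not Flutter’s implementation: it assumes a hypothetical list of canvas widgets (`id`, `role`, `label`, `rect`) and mirrors each one into a transparent DOM element positioned over the canvas, so a screen reader finds a real `button` with a name while the user sees pixels.

```javascript
// Hypothetical widget list produced by a canvas UI, e.g.
// { id: "save", role: "button", label: "Save", rect: { x, y, w, h } }

// Pure step: describe one invisible DOM element per canvas widget.
function semanticsFor(widgets) {
  return widgets.map((w) => ({
    tag: w.role === "button" ? "button" : "span",
    id: `sem-${w.id}`,
    label: w.label,
    style: {
      position: "absolute",
      left: `${w.rect.x}px`,
      top: `${w.rect.y}px`,
      width: `${w.rect.w}px`,
      height: `${w.rect.h}px`,
      // Transparent but still exposed to assistive technologies
      // (unlike display: none or visibility: hidden).
      opacity: "0",
    },
  }));
}

// Browser step: rebuild the mirror inside an element that overlays the canvas.
function mountSemantics(doc, container, nodes) {
  container.replaceChildren(); // drop the previous mirror
  for (const node of nodes) {
    const el = doc.createElement(node.tag);
    el.id = node.id;
    el.textContent = node.label; // becomes the element's accessible name
    Object.assign(el.style, node.style);
    container.appendChild(el);
  }
}
```

In a page, this might be called as `mountSemantics(document, overlay, semanticsFor(widgets))` whenever the canvas UI changes, where `overlay` is a hypothetical absolutely positioned element covering the canvas. A real implementation must also route focus and activation from these elements back to the canvas application, which this sketch leaves out.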

In the 2000s, we got excited about perfectly structured, semantic HTML, microdata, and optimisation for search bots. Now AI seems to understand documents perfectly well without all that. Moreover, the rise of LLMs brings new interaction patterns: we chat with content, and users don’t have to visit your website to get an answer. Are we entering a post-document, or post-HTML, world? After Single Page Applications (SPAs), we will have Single Canvas Applications (SCAs) powered by WebGPU and WebAssembly blobs. Maybe.

Is the DOM an old technology? I bet we will keep the DOM for simple stuff and content-heavy sites (e.g. Wikipedia) and use SCAs for more advanced and complex applications (e.g. photo and video editors, real-time data dashboards). We’ve been there before with Flash. But this time, we have open technologies and more than one runtime. There is an analogy with rail deregulation: the browser is the rail and the infrastructure, and the canvas is the intermodal freight container that novel UI frameworks and renderers will use to put content on the screen. Is it time to bury “right-click, view source” once and for all?

Footnotes

- Article by Sean Barrett in Game Developer magazine (page 34).

- Exploring the Immediate Mode GUI Concept for Graphical User Interfaces in Mixed Reality Applications.

- Clay (short for C Layout) is a high-performance 2D UI layout library that can compile to HTML.

- egui is an immediate-mode GUI library written in Rust. It runs both on the web and natively; on the web, it is compiled to WebAssembly and rendered with WebGL.

- Painting the Future: Canvas-Based Rendering & Accessibility Today and Tomorrow.